11.5. Remote Memory Access

In Section 11.2, we introduced two communication modes: two-sided and one-sided. The point-to-point and collective communications discussed in the earlier sections focus on two-sided communication. This section specifically delves into one-sided communication, also known as Remote Memory Access (RMA).

Window

Memory allocated by a process is private by default: only that process can access it. Before other processes can read or write part of it, that region has to be explicitly exposed. In MPI, a Window defines such a remotely accessible memory region. Creating a window is a collective operation over a communicator, and every process in that communicator can then read and write the exposed region. Fig. 11.12 illustrates the relationship between private memory regions and remotely accessible windows. In mpi4py, the mpi4py.MPI.Win class provides the window-related operations.

Fig. 11.12 Private memory regions and remotely accessible windows

Creating a Window

A window can be created with mpi4py.MPI.Win.Allocate or mpi4py.MPI.Win.Create. mpi4py.MPI.Win.Allocate allocates a new memory buffer that can be accessed remotely, while mpi4py.MPI.Win.Create exposes an existing memory buffer for remote access. The distinction shows in the first parameter of the two methods: mpi4py.MPI.Win.Allocate(size) takes the size, in bytes, of the buffer to allocate, whereas mpi4py.MPI.Win.Create(memory) takes an existing buffer object, memory (for example, a NumPy array).

Read and Write Operations

Once a window has been created, three methods can be used to read and write data in the exposed memory region: mpi4py.MPI.Win.Put, mpi4py.MPI.Win.Get, and mpi4py.MPI.Win.Accumulate. All three take an origin argument and a target_rank argument. origin is the local buffer on the calling process (the origin process), which data is read from or written into, and target_rank is the rank of the remote process (the target process) whose window is accessed.

Win.Put moves data from the origin process to the target process.

Win.Get moves data from the target process to the origin process.

Win.Accumulate is similar to Win.Put: it moves data from the origin process to the target process, and also applies an aggregation operation that combines the incoming data with the data already in the target window. Aggregation operators include mpi4py.MPI.SUM, mpi4py.MPI.PROD, and others.

Data Synchronization

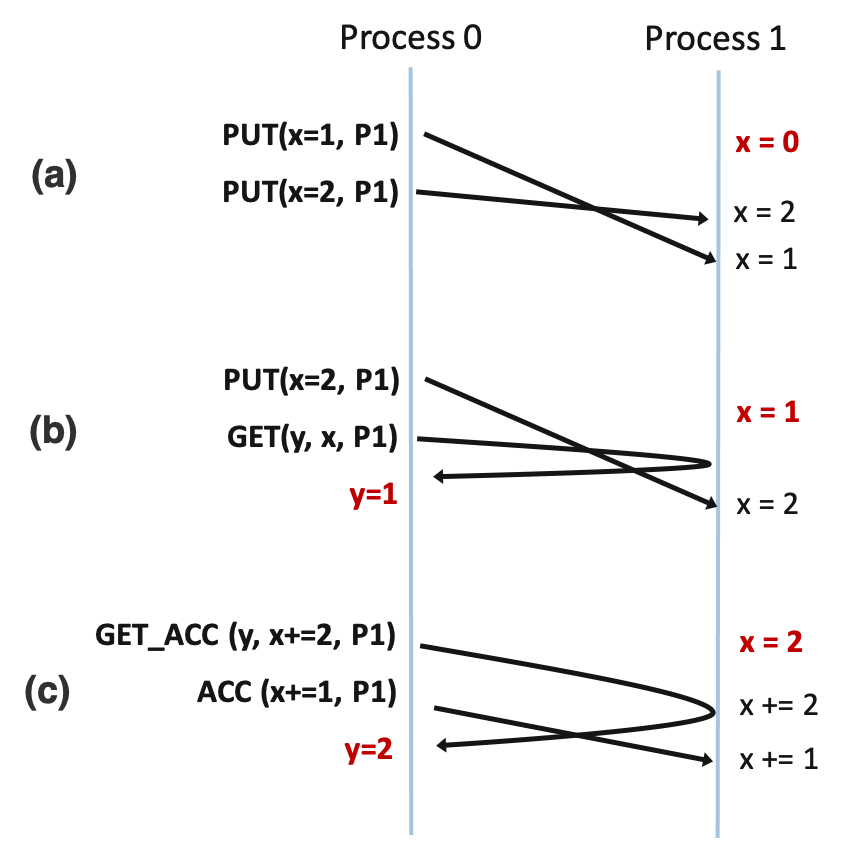

In a single-process program, operations execute in program order. When multiple processes read and write the same memory region, however, data synchronization issues may arise. As illustrated in Fig. 11.13, without explicit control over the order of read and write operations, a memory region may end up holding unexpected data.

Fig. 11.13 Data synchronization in parallel I/O. P0 and P1 stand for two processes.
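The ordering problem can be made concrete without MPI. The following sketch is a hypothetical, pure-Python simulation (the run function and the two schedules are illustrative and are not part of mpi4py): two processes each read a shared value, add 1, and write it back, and the final value depends on how their steps interleave.

```python
def run(schedule):
    """Replay read and write steps of several processes on one shared cell."""
    shared = 0
    local = {}  # value each process last read
    for proc, step in schedule:
        if step == "read":
            local[proc] = shared
        else:  # "write": store the incremented copy back
            shared = local[proc] + 1
    return shared

# P0 finishes its read-modify-write before P1 starts.
serialized = [("P0", "read"), ("P0", "write"), ("P1", "read"), ("P1", "write")]

# Both processes read before either one writes, so one update is lost.
interleaved = [("P0", "read"), ("P1", "read"), ("P0", "write"), ("P1", "write")]

print(run(serialized))   # 2
print(run(interleaved))  # 1
```

Synchronization mechanisms exist to rule out schedules like the second one, or to make their outcome well defined.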

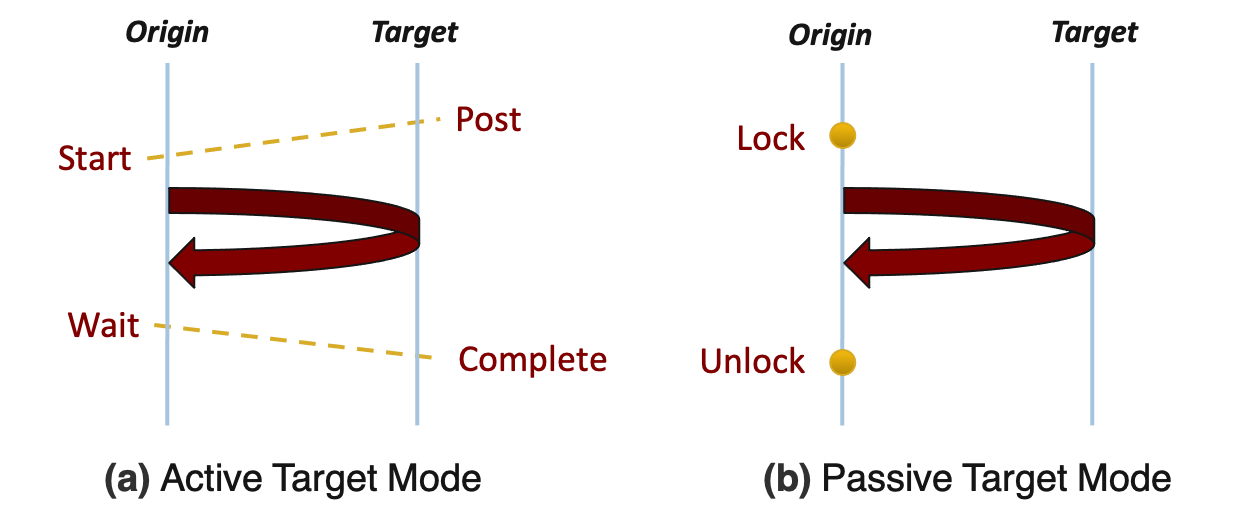

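To address this problem, MPI provides several data synchronization mechanisms, broadly categorized into Active Target Synchronization and Passive Target Synchronization, as illustrated in Fig. 11.14. In active target synchronization, the target process also takes part in the synchronization calls. In passive target synchronization, only the origin process makes them, for example by locking and unlocking the target's window.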

Fig. 11.14 Active Target Synchronization and Passive Target Synchronization.

Example: Remote Read and Write

A complete RMA program should include the following steps:

Create a window

Implement data synchronization

Perform data read and write operations

An example that follows these steps is shown in Listing 11.9 and is saved as rma_lock.py.

import numpy as np
from mpi4py import MPI
from mpi4py.util import dtlib

comm = MPI.COMM_WORLD
rank = comm.Get_rank()

datatype = MPI.FLOAT
np_dtype = dtlib.to_numpy_dtype(datatype)
itemsize = datatype.Get_size()

N = 8
# Only rank 0 exposes memory; all other ranks attach a zero-size window.
win_size = N * itemsize if rank == 0 else 0
win = MPI.Win.Allocate(win_size, comm=comm)

buf = np.empty(N, dtype=np_dtype)
if rank == 0:
    # Rank 0 writes 42 into its own window.
    buf.fill(42)
    win.Lock(rank=0)
    win.Put(buf, target_rank=0)
    win.Unlock(rank=0)
    # The barrier guarantees that the Put has finished before any Get starts.
    comm.Barrier()
else:
    comm.Barrier()
    # Every other rank reads the window on rank 0.
    win.Lock(rank=0)
    win.Get(buf, target_rank=0)
    win.Unlock(rank=0)
    if np.all(buf == 42):
        print(f"win.Get successfully on Rank {comm.Get_rank()}.")
    else:
        print(f"win.Get failed on Rank {comm.Get_rank()}.")

!mpiexec -np 8 python rma_lock.py

win.Get successfully on Rank 4.
win.Get successfully on Rank 5.
win.Get successfully on Rank 6.
win.Get successfully on Rank 7.
win.Get successfully on Rank 1.
win.Get successfully on Rank 2.
win.Get successfully on Rank 3.